Current 2022, organized by Confluent, centered on the world of data streaming and billed itself as the inaugural data streaming event. It replaces Kafka Summit as the conference for the ecosystem that has sprung up around open-source data streaming technologies, bringing together academics, application developers, data professionals, SREs, and software/services providers.

The planners ensured there was good content for everyone, from people just thinking about adopting data streaming to veterans sharing lessons learned from large-scale deployments, from integrating streaming into an enterprise data architecture, or from newly discovered use cases. And kudos to the team for broadening the discussion to include great sessions on one of the day’s burning questions: to batch or to stream?

Current 2022 was held in person and virtually on October 4th and 5th in Austin, Texas, but you can still register at no cost for the on-demand version of the event and get access to the content until November 7, 2022.

Streaming Data in Action

Using streaming data calls for much more than Kafka, and the Kafka Summit’s new name appropriately reflects that fact. So did the Day One keynote, which highlighted some of the enabling technologies and use cases. Take Druid, for example. Apache Druid is an open-source database for real-time applications (i.e., applications that require data streams). The founder of Apache Druid and of Imply, Gian Merlino, described how working with streaming data differs from working with traditional data sets. The emphasis is on change and events, and you don’t go back and change something from earlier in the stream (no UPDATEs). Gian pointed out, “Things just keep happening. It’s much more authentic to how life really works, and so it enables things to become really real time and enables software to get hooked into the ops of a business.”
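The “no UPDATEs” idea can be illustrated with a minimal Python sketch. It is not taken from the keynote and does not use Druid or Kafka. The `Event` type, the event kinds, and the reservation IDs are all hypothetical, chosen only to show that events are appended and the current view is derived by replaying them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    """A hypothetical, immutable reservation event (names are illustrative)."""
    reservation_id: str
    kind: str        # "booked", "changed", or "canceled"
    nights: int = 0


def current_state(log):
    """Replay the append-only log to derive the latest view per reservation."""
    state = {}
    for event in log:
        if event.kind == "canceled":
            state.pop(event.reservation_id, None)
        else:
            # "booked" and "changed" both set the latest known value.
            state[event.reservation_id] = event.nights
    return state


# Events are only ever appended; earlier entries are never modified.
log = []
log.append(Event("r1", "booked", 3))
log.append(Event("r2", "booked", 2))
log.append(Event("r1", "changed", 5))
log.append(Event("r2", "canceled"))

print(current_state(log))  # {'r1': 5}
```

The change to reservation `r1` is recorded as a new event, not as an edit to the original booking, so the full history stays available for later analysis.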

Streaming platforms are not yet seeing wide adoption, but Gian mentioned a few successful use cases built on Kafka and Druid: internal observability, an advertising analytics platform implemented by Reddit, the Citrix Analytics service, and Expedia’s reliance on data streaming to make the real-time experience ubiquitous. Expedia’s effort started with customer self-service, especially around canceling and changing reservations. Next came the customer’s expectation of receiving the right information over the right channel. Now the Virtual Agent Platform supports 20 million conversations per year, as Anush Kumar, Expedia’s VP of Technology, explained during the first keynote.

The virtual Druid Summit on December 6th will be a good opportunity to learn more about Druid and the role it can play in a streaming platform.

See also: Apache Pulsar: Core Technology for Streaming Applications

Whether to Stream or Batch

A panel with the provocative title “If Streaming is the Answer, Why are We Still Doing Batch?” took on that question with a robust discussion of when to use streaming data and when to use batch processing. And, yes, the panel did consider the fact that many of the techniques for consuming or landing streaming data include some kind of batching or micro-batching.
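To make the micro-batching point concrete, here is a small Python sketch under stated assumptions. The `micro_batches` helper is hypothetical and not tied to any particular streaming tool. It groups an incoming stream into small fixed-size batches, which is one simple way a consumer might land streaming data.

```python
from itertools import islice


def micro_batches(stream, size):
    """Yield lists of up to `size` items from any iterable stream."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return  # the stream is exhausted
        yield batch


# Seven events arrive one at a time but are handed off in groups of three.
for batch in micro_batches(range(7), 3):
    print(batch)
```

Real systems usually also flush on a time limit so that a slow stream does not hold a partial batch indefinitely. That detail is left out here for brevity.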

The panel was hosted by Chris Jenkins and included Tyler Akidau, principal software engineer, Snowflake; Amy Chen, partner engineering manager, dbt Labs; Adi Polak, VP DevEx, Treeverse; and Eric Sammer, CEO, Decodable.

What made the panel so interesting was the interplay between streaming evangelists and pragmatic points of view. This sequence of statements is an example of their back-and-forth.

Tyler: It’s shocking how many people want their data in ten seconds, but when you [point out] the complexity and cost, they realize that 1 hour is enough.

Amy talked about an example of a marketing campaign failure: [There wasn’t] a real-time dashboard to look at a promo code. But the system could not respond in three hours. [We need to] ask ourselves what the business cost of not having streaming is–ask what the business impact is to non-technical users looking at the data.

Adi: Uber information is an event. In the end, it’s a combination of event-driven architecture and streaming, real-time data of vehicle location, but then it’s enriched with some batch data pricing.

Eric: The operational people and the analytics people are on different planes. Dynamic pricing systems, GrubHub, Uber, and Lyft wouldn’t exist in a batch world. Data is going to machines vs. people. Let’s make it all streaming.

In the end, everyone agreed that there were use cases where streaming was the best approach and worth the investment in human and financial resources, and, at the other end of the spectrum, there were use cases where batch truly sufficed–where there were no real-time expectations and little ROI to be gained–at least for now. New use cases are being discovered or invented rapidly, and these could accelerate the adoption of streaming data.

Why Is Streaming Data So Hard?

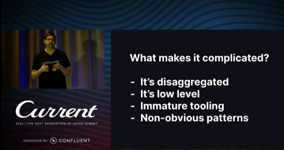

One more session worth mentioning exemplifies the broad view that Current 2022 took. “Streaming is Still not the Default: Challenges, Objections, and the Future of Streaming” by Eric Sammer, CEO of Decodable (and the original engineer behind Hadoop operations), was a careful look at the technical differences between streaming data and batch processing, pointing out the particular and unnecessary complexities of streaming. Make no mistake, Eric is a proponent of streaming data and predicts that sometime in the next five years or so, streaming will dominate because it will just make the most sense to process data continuously.

The current state of streaming data is that it is hard and expensive to do. Throughout the presentation, Eric did indicate how this situation could or will improve.

The challenges and differences between working with data streams vs. batch data are due to the immaturity of the supporting technology, from systems to developer tools, which have to be stitched together and require a lot of manual intervention. Specifically, you actually have to configure all aspects of how data is organized: partitions, sizing, ordering, keys and values, and serialization, which, according to Eric, feels like defining a Java class. You have to define and engineer behavior and guarantees, whether for messaging or processing. You are also on your own for managing state, restoring data, and then dealing with continual degradation due to heavy loads and failures. APIs are hard: different API layers, human-optimized vs. machine learning, and 30 years of query optimization to take on. The most daunting challenge is that there are as yet no full-featured SQL engines built into streaming tools, so when you write queries, you are taking on the challenge of recreating a long history of query optimization. Many sessions at Current 2022 gave evidence that all of these are being worked on in open-source projects in the Kafka ecosystem, and a community of engineers from software vendors and businesses is collecting best practices and testing use cases to hone the technology.
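The kind of decisions described above, such as choosing keys, partitions, and serialization, can be sketched in a few lines of Python. This is an illustration only and not how Kafka or any specific client library implements them. The partition count, the CRC32 hash (a stand-in for whatever hash a real partitioner uses), and the JSON encoding are all assumptions made for the example.

```python
import json
import zlib

NUM_PARTITIONS = 3  # assumed for illustration; real topics are sized per workload


def partition_for(key, num_partitions=NUM_PARTITIONS):
    """Map a key to a partition with a stable hash (CRC32 as a stand-in)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def serialize(value):
    """Encode a record value as JSON bytes (one of many possible formats)."""
    return json.dumps(value, sort_keys=True).encode("utf-8")


def deserialize(payload):
    """Decode JSON bytes back into a Python dict."""
    return json.loads(payload.decode("utf-8"))


# An in-memory stand-in for a partitioned topic: one list per partition.
partitions = [[] for _ in range(NUM_PARTITIONS)]


def send(key, value):
    """Append a serialized record to the partition chosen by its key."""
    index = partition_for(key)
    partitions[index].append((key, serialize(value)))
    return index


first = send("rider-42", {"lat": 30.27, "lon": -97.74})
second = send("rider-42", {"lat": 30.28, "lon": -97.75})

# Records with the same key land in the same partition, in send order.
print(first == second)
```

Because ordering is only preserved within a partition, the choice of key decides which events are guaranteed to be seen in sequence. That is one reason these settings have to be thought through up front.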

Note that you can still register at no cost for the on-demand version of the event and get access to the content until November 7, 2022. And, of course, plan to attend Current 2023.

Elisabeth Strenger is a Senior Technology Writer at CDInsights.ai.