Retailers (both brick and mortar and eCommerce types) face numerous data challenges. They typically work in distributed application environments with data squirreled away in databases around the country or globe. Business demands in retail require a central view, and applications require access to that data. Everything from managing inventory and supply chain logistics to developing new marketing campaigns and promotions requires a single view of all relevant data. Lacking that view, retailers also miss the opportunity to improve customer engagement. Meeting the demands of today’s omnichannel shoppers requires a 360-degree view based on all data related to each customer.

One option is to bring all data into a single central database. Unfortunately, such an approach lacks the scalability needed to handle the explosion in the quantity of data used in retail operations. There are performance issues for geographically dispersed customers. Specifically, latency becomes an issue as the distance between customers and a central data repository increases. And then, there is the issue of a single source of failure. A system crash, natural disaster, or flooding in a building shuts everything down.

In today’s modern application development environments, a better alternative is to use a distributed database that delivers easy scalability, provides disaster resilience, and supports variable high transaction loads.

Critics of distributed databases often raise latency as a downside. A standard distributed database typically spreads data across all nodes to increase data survivability and availability. However, latency increases in parallel with the distance between data and requests.

CockroachDB does not sacrifice latency for strong consistency and improved survivability. By breaking data up and spreading it across multiple nodes, it increases survivability. However, this increases the distance between data nodes, thereby creating additional latency as the copies of data communicate with each other from afar.

Addressing regional compliance issues

When looking for a suitable database, an additional factor to consider is ensuring global data protection and compliance with ever-growing and varied local privacy requirements.

For example, a retailer operating in Europe must meet the General Data Protection Regulation (GDPR) requirements, an EU regulation concerning data protection and privacy aimed at protecting the data of all individuals within the European Union. Specifically, it tackles the export of personal data outside of EU territory. This regulation aims primarily to give control of their data back to EU citizens and residents. Any company that does business in the EU market must adopt GDPR rules.

Other nations have similar data privacy edicts. Japan’s data protection law, the Act on the Protection of Personal Information (APPI), requires businesses to prevent the unauthorized disclosure, loss, or destruction of personal data. It limits transfers of data to third parties unless the data subject consents.

There’s no equivalent of the GDPR or APPI in the United States. Instead, retailers and other businesses must navigate a mosaic of different state and federal rules, some of them varying widely, also governing some of the same issues, but there’s no central authority that enforces them.

For example, the Federal Trade Commission Act has broad jurisdiction over commercial entities under its authority to prevent unfair or “deceptive trade practices.” While the FTC does not explicitly regulate what information should be included in website privacy policies, it uses its authority to issue regulations, enforce privacy laws, and take enforcement actions to protect consumers.

At the state level, some of the major efforts that require adherence include the California Consumer Privacy Act (CCPA) and the New York Stop Hacks and Improve Electronic Data Security (SHIELD) Act. Both address the data protection and privacy of their respective citizens.

One additional challenge with meeting the mandates of such country-wide and state laws is that the regulations evolve over time. For example, the APPI has a built-in “every three years” review policy. Amendments made over time have brought the APPI closer in alignment with GDPR. And changes made in 2020 increase the obligations of companies to be transparent and secure with the personal data of Japanese residents.

Geo-partitioning data to meet data privacy regulations

One way to help address the varied data privacy requirements is to keep data local and manage to that locality’s rules and regulations. The way to accomplish this is to partition retail data by location, also known as geo-partitioning.

Geo-partitioning lets developers say where data should be stored at the database, table, and row level. For example, a developer can define the physical location where every row is stored to meet the many global privacy and compliance measures.

How does geo-partitioning work? Take a retailer that predominantly does business in the U.S. and Japan and has online storefronts in each country. The retailer could deploy a single, global database that uses geo-partitioning to keep the data for United States users in the United States and the data for their Asia-Pacific users in Asia.

Such capabilities are possible with CockroachDB. Geo-partitioning grants developers row-level replication control. By default, CockroachDB lets them control which tables are replicated to which nodes. But with geo-partitioning, developers can control which nodes house data with row-level granularity.

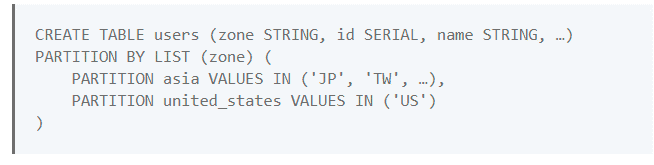

The approach is quite simple. To geo-partition a table, a developer must:

- Define location-based partitions while creating a table.

- Create location-specific zone configurations.

- Apply the zone configurations to the corresponding partitions.

The coding involved is minimal. For example, creating a partition for U.S. and Asian customers only requires the following:

Tables with data about a user, like orders, that should be subject to the same partitioning rules can be easily “interleaved” into the user’s table.

CockroachDB provides retailers the controls to set data location and thereby reduce latency. For example, table/database level replication zone configurations can be used to specify exactly in which localities the data in each table or database should or should not be placed.

Geo-partitioning allows retailers to specify configurations on subsets of a table. This can be used to denote exactly in which localities individual rows of a table should be placed based on the value of some column(s) in the row.

The bottom line: Tying retail data to locations can help meet granular local data privacy regulations and reduce global latencies to meet customer expectations for performance.

Salvatore Salamone is a physicist by training who has been writing about science and information technology for more than 30 years. During that time, he has been a senior or executive editor at many industry-leading publications including High Technology, Network World, Byte Magazine, Data Communications, LAN Times, InternetWeek, Bio-IT World, and Lightwave, The Journal of Fiber Optics. He also is the author of three business technology books.