What is responsible AI? Is it something that we'll know when we see it? Or, conversely, only when we discover that AI causes harm rather than the good that was intended?

There is a groundswell of demand for clarity about, and protection from, AI systems that increasingly automate decisions that used to require human judgment. Organizations such as ISO, governments, and the non-profit, community-based Responsible AI Institute (RAII) are responding with certifications, frameworks for affirming compliance, and guidance on how to build and operate AI-reliant systems responsibly.

So, what is responsible AI? A widely accepted definition of responsible AI is AI that does not harm human health, wealth, or livelihood.

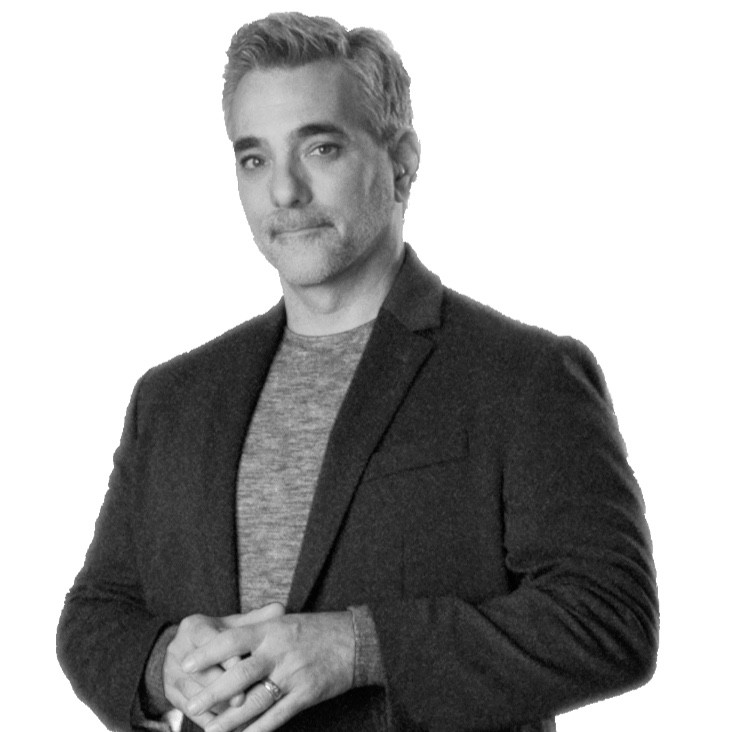

CloudDataInsights recently had the chance to interview Seth Dobrin, president of RAII, ahead of the AI Summit New York. We discussed the Institute's mission, its challenges, and the opportunities in bringing together experts from academic research, government agencies, standards bodies, and enterprises in a drive toward creating responsible AI.

This year, co-located with AI Summit, the RAISE event (in-person and virtual on December 7th, 5:30 to 7:30 p.m. EST) will recognize organizations and individuals who are at the forefront of this effort.

Note: The interview was edited and condensed for clarity.

Q1: The mission of the Responsible AI Institute, as stated on its website, seems very clear and obvious. Are you finding that you have to go into detail or explain what responsible AI is and why we have a responsibility to uphold it?

For the most part, no one wants to create irresponsible AI. Most people want to create responsible AI, so inherently, that's not the problem. However, some organizations struggle to make a business case for it, and it gets deprioritized among everything else, especially in difficult times like the ones we've been through over the last few years.

That said, difficult times can also push organizations harder to do things like this. So we do spend some time with companies and organizations helping them understand why it's important, why to do it now, and what the business benefits are, and also how the RAI Institute differs from the for-profit organizations in this space. We are the only independent non-profit in this space that's approved to certify against published standards.

Q2: The community-based model is central to your organization. You have the innovators, the policy makers, and the businesses that are trying to conform to responsible AI standards. Do you actively have to bridge the conversation between the researchers and the enterprises?

We’re a fully member-driven organization, and community is a big part of what we do. What the RAI Institute brings forward is not just the very deep expertise of the people we have employed, but it’s also the experience and opinion of the community.

The community includes academics, governments, standards bodies, and individuals who are part of corporations. Since we align to international best practices and standards, we spend a lot of time with policy makers and regulators, helping them understand those best practices and how they can validate that companies and organizations are, in fact, aligned with the regulations.

Q3: Once a responsible AI framework is adopted, an AI audit takes only weeks, which is a long way from the early days when AI was, to a degree, unexplainable, or simply a black box. What technology lift or investment is required of companies that want to certify their responsible AI?

A responsible AI audit is similar to a SOC 2 (System and Organization Controls 2) audit of how customer data is managed, or any other kind of audit process. Since the ultimate goal is to protect human health, wealth, or livelihood, it will more than likely involve some kind of certification and a third-party audit, especially once the regulations have matured. In any case, the starting point is a self-audit, a self-assessment.

With a self-assessment, you can at least attest that a given piece of AI-enabled software is, in fact, responsibly implemented up to the sixth dimension (security). An organization whose AI usage doesn't affect health, wealth, or livelihood might stop at self-attestation and declare that its AI doesn't infringe on consumer protections, that people are accountable for it, and that it's fair and explainable.

Other organizations might want someone else to come in and validate the self-assessment, even if there's no impact on health, wealth, or livelihood. They have decided that it's still important for their customers to know that they care about responsible AI. The next level is a third-party audit of your systems, which can then qualify them for certification.

The space is still maturing. We're not aware of a single third-party audit that's been completed. The first one is being piloted by Canada's AI Management System Accreditation Program, and the pilot is based on the ISO/IEC AI management system standard (ISO/IEC 42001).

The RAI Institute doesn't build technology to implement AI or to perform the audits. We build the assessments, called conformity assessment schemes. These are aligned to global standards, and they ingest a lot of data from the technologies at play in the AI system. Fairness metrics, bias metrics, explainability metrics, and robustness metrics, for example, are all part of what is ingested for conformity assessments.

Q4: Being ahead of the market is very important, but in this particular instance, do you feel that governments are running ahead of the users? For example, local and national governments are putting their policies and fine structures in place without necessarily having the tools to enforce the regulations.

I don’t think that’s necessarily unusual. If you look back at GDPR, it was very similar. While policy makers seem to be ahead of organizations these days, policy makers are not ahead of what society is demanding.

And so, in this case, I would argue that it's organizations that are behind what society is demanding, and regulators and governments are just helping to make sure that we, as individuals and as citizens of the world, have the protections we need and that there's appropriate accountability. That's an important nuance: yes, they're ahead of organizations, but if organizations were doing this on their own, the regulations probably wouldn't be coming as fast as they are.

Q5: What is the RAI Institute's number one goal in the coming year? And what challenges do you foresee?

As we move forward, our goal is to establish ourselves as the de facto standard for assessing, validating, or certifying that your AI is, in fact, responsible. Essentially, that means enabling organizations to fast-track their responsible AI efforts. That's our focus.

We're focused on two types of organizations. We're looking at organizations that build and buy AI, and how we can help them. And we're looking at providers that build and supply AI-enabled software, and helping them bring their tools to bear in a responsible manner.

For both, we help ensure that their AI systems are appropriately mature, that the right policies and governance are in place, and that they understand the regulatory landscape. Adopting that mindset helps companies really know whether they are applying their tools appropriately.

Our biggest challenge is confusion in the market, where many believe that software providers can get organizations what they need, when really, software tools are just one piece of the puzzle. On their own, they don't get organizations where they need to go.

Organizations also need standards, much like the ISO or NIST standards we already use, but developed specifically for AI, and they need a way to show that they are aligned and complying with those standards.

The RAI Institute's conformity assessments demonstrate that an organization is, in fact, aligning to standards. The standard behind Canada's AI management system program is the only AI standard with an approved conformity assessment that exists today. Canada, ISO, IEC (ISO/IEC 42001), and others have agreed to harmonize their standards to make it easier to validate and certify responsible AI.

We expect these to roll out globally over the next 12 to 18 months. Soon after that, we'll see the EU AI Act finalized. If we extrapolate from GDPR, the AI Act will become enforceable two years after it goes into effect. So I think we'll have two years to get certification bodies up and running so that organizations have some way to validate that they are aligning to regulations.

Q6: Another point of confusion is around AI itself and what it means to enable an application with AI. Have you seen companies claim or deny that an application is using AI?

When we talk about AI, we have to keep in mind that rules have been used in systems for a long time. In fact, if you look at New York City's automated employment decision tools (AEDT) law, it's not an AI tools act; it's an automated employment act, and it applies to rules-based automation as well as AI-enabled automation. From our perspective, the conformity assessment can be used for systems that use AI or rules, and that's how it should be, especially when there's an impact on health, wealth, or livelihood.

Any time any kind of automated system is used for healthcare, employment, or finance decisions, you need some kind of assessment of whether it is being used responsibly, because rules can be just as unfair as AI. The math itself is neither unfair nor biased. It's the past decisions that humans have made that are, and those past decisions are still ingrained in rules. When I talk about AI, rules are part of it. By the way, some people refer to rules as "symbolic AI." It's the automation of decision-making, whether enabled by rules or AI, that is giving rise to demands for responsible AI.
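To make concrete the point that a plain rule can be as unfair as a model, here is a minimal Python sketch of one common bias metric: the selection-rate impact ratio, the kind of figure reported in bias audits under laws such as New York City's AEDT law (each group's selection rate divided by the rate of the most-selected group). The screening rule, the applicant data, and the 0.8 flag threshold (borrowed from the US "four-fifths" rule of thumb) are all hypothetical illustrations, not the RAI Institute's conformity assessment method.

```python
from collections import Counter


def selection_rates(decisions):
    """Return {group: selected / total} from (group, was_selected) pairs."""
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratios(decisions):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    return {g: r / best for g, r in rates.items()}


# Hypothetical rules-based screen: no model involved, just a fixed rule
# that rejects anyone with an employment gap longer than 12 months.
def rule_screen(applicant):
    return applicant["gap_months"] <= 12


# Hypothetical applicant pool in which employment gaps are unevenly
# distributed across two groups, A and B.
applicants = (
    [{"group": "A", "gap_months": 0}] * 8
    + [{"group": "A", "gap_months": 24}] * 2
    + [{"group": "B", "gap_months": 0}] * 4
    + [{"group": "B", "gap_months": 18}] * 6
)

decisions = [(a["group"], rule_screen(a)) for a in applicants]
ratios = impact_ratios(decisions)

# Assumed threshold: flag groups whose ratio falls below four-fifths.
flagged = [g for g, r in ratios.items() if r < 0.8]

print(ratios)   # {'A': 1.0, 'B': 0.5}
print(flagged)  # ['B']
```

The rule never looks at group membership, yet group B is selected at half the rate of group A because the rule encodes a criterion that falls unevenly across groups. That is the sense in which past human decisions can stay "ingrained in rules."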

Elisabeth Strenger is a Senior Technology Writer at CDInsights.ai.