MLOps workflows work on the back of orchestration pipelines that enable automated execution.

Automated execution, in the context of this article, is the execution of a predefined flow (pipeline),

often driven by parameters and/or environment variables or conditions that acts against a modified

source.

The pipeline is triggered by an action, such as a new code commit or training data pushed into

the system, or on a schedule. The pipeline defines and prepares the environment for each stage of the

workflow. MLOps workflows use standard DevOps tools for all non-ML specific functionality, so if you

have experience with continuous integration, this mindset will be very familiar.

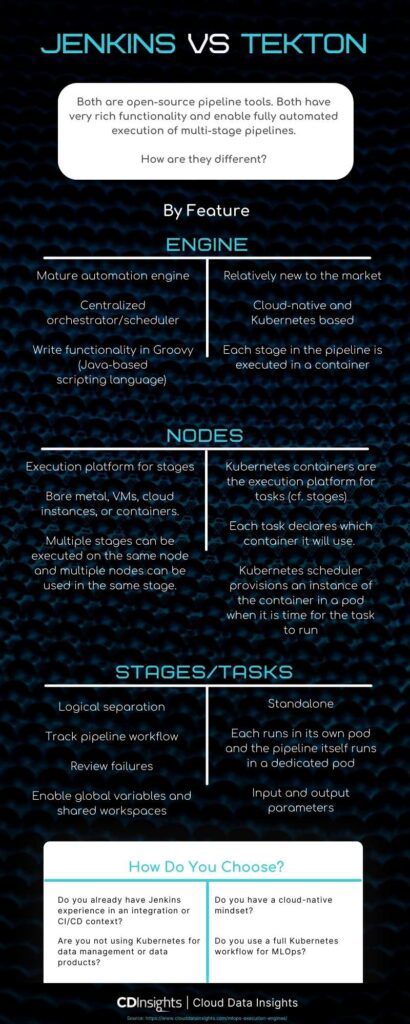

Two of the popular open-source pipeline tools are Jenkins and Tekton. While they are both used for the same purpose, the methodology/philosophy that each one uses is very different. I am going to bypass the religious war that so often happens when discussing competing technologies and help you understand what the practical differences are to help you decide which one is the best fit.

Engine

Jenkins is a mature automation engine, heavily used in industry, mostly for continuous integration and automated testing. It is a centralized orchestrator/scheduler that fully controls all the flows in its jobs. It can be self-contained or hosted on Kubernetes, but in either case, it retains full control. Pipeline development and all additional functionality needed for the job are written in Groovy (a Java-based scripting language). You can also develop a shared library, which will enable multiple jobs/Jenkins servers to reuse code.

Tekton is relatively new to the market and is cloud-native – fully integrated with Kubernetes and written with a Kubernetes mindset. Each stage in the pipeline is a task that involves the execution of a container. There is the possibility to add a script that will be executed by the container as well. The development language is whatever is supported by the container. There are shared tasks that enable the reuse of code.

See also: What is MLOps? Elements of a Basic MLOps Workflow

Triggering

Jenkins uses plugins to integrate with external systems that can trigger its pipelines. Examples of this are

github, gitlab and artifactory through which code changes or file uploads can trigger the pipeline to run

and act on the new data. Jenkins also has a cron (date/time-based scheduling) plugin, so that jobs can

be scheduled for a specific time. Jenkins also includes a REST API, through which jobs can be triggered by

systems that aren’t integrated with plugin. Finally, jobs can be started manually from within the Jenkins

GUI.

Tekton uses a Kubernetes Event Listener object to trigger its jobs. For each pipeline, an http endpoint

can be defined, through which the parameters can be passed. For date/time-based scheduling, the

Kubernetes cron can bescheduled top send an http call (using curl) to the event listener. Pipelines can be

triggered manually as well, either from the GUI or the tkn command line tool.

Nodes

Jenkins uses nodes as an execution platform for stages. These nodes can be bare metal, virtual machines (pets), cloud instances (cattle), or containers. They each have software installed that connects them to the Jenkins server. The nodes can be categorized on the Jenkins server as labels so that each pipeline can define what type of node it wants for each stage without having to dedicate a specific instance. This is very flexible/ as multiple stages can be executed on the same node, and multiple nodes can be used in the same stage.

Tekton uses Kubernetes containers as the execution platform for its tasks. Each task declares which container it will use. The Kubernetes scheduler then provisions an instance of the container in a pod when it is time for the task to run.

Stages/Tasks

Jenkins allows a logical separation of its pipeline with the usage of stages. This enables easy tracking of where the pipeline is at any given time and the ability to review any failures. However, they are all fully integrated aspects of the pipeline entity. This gives the ability to use global variables and shared workspaces natively.

Tekton pipelines are divided into standalone Tasks. The pipeline itself is executed in one pod, and each task is executed in its own pod. Each task has input and output parameters. If you want to use a shared workspace, you need to allocate a persistent Kubernetes volume (PVC) and use that as a mounted volume in each task.

Logging

Jenkins has a full pipeline console log that can be viewed from the server. Using the Blue Ocean plugin will give a visual of the pipeline stages, in which the logs of each stage can be viewed individually. You can define a data retention policy so that the logs/execution history will eventually get cleaned up, but they are there forever by default.

Tekton has logs in each of its pods, and they are also accessible using the tkn command line tool. However, the logs are only accessible while the pods are still there. Once Kubernetes deletes the pods, the logs are gone. For longer-term log storage and analyzation, an additional tool must be used, such as an ELK log analysis and visualization stack.

Integrations

Jenkins has a very rich ecosystem of plugins that pipelines can integrate with. This adds almost endless functionality to the pipeline. The plugins can generally be configured and then referred to as objects from anywhere in the pipeline.

Tekton has a hub where common tasks are shared. The tasks extend the functionality in a similar way to Jenkins plugins but generally require more integration effort, as each one is standalone, as mentioned above.

Summary

Both Jenkins and Tekton have very rich functionality and enable the fully automated execution of multi-stage pipelines. The decision on which one you choose will depend on a number of factors, including what the rest of your ML toolset is comprised of and what kind of experience your staff has. If you are in a cloud-native mindset, using a full Kubernetes workflow, you will probably want to use Tekton. However, if you have a lot of Jenkins experience and are not (fully) using Kubernetes for everything else, you will probably want to stick with Jenkins.

Sim Zacks is a DevOps Architect at GM, working on connecting the pieces to deliver the software for the autonomous vehicle. His 25+ years of experience include multiple technology fields including DevOps/CI-CD, software development, system & network administration, database development & design, quality engineering and leading large projects. Sim uses his knowledge & experience to seamlessly connect and translate all aspects of the technology stack with business requirements. He is a mentor & strategist, working with people to overcome everyday challenges and further their careers. He writes a blog on personal strategy, which can be found on his LinkedIn profile. He is also a speaker for local and international conferences. Sim earned an MBA from the University of Phoenix and a BSc in Computer Science from Lawrence Technological University.