At AWS re:Invent 2022, Werner Vogels announced EventBridge Pipes, a service targeted at simplifying real-time integrations. A plethora of integration patterns already exist in AWS’s tool arsenal, including API gateways, message topics and queues, event buses, orchestration tools, and streams. Understanding yet another communication service’s unique value proposition within AWS’s ecosystem can pose a challenge. This article explores EventBridge Pipes’ position in the AWS integration toolbox and the class of problems it simplifies.

Note that this article does assume some basic knowledge about EventBridge buses, event rule evaluations, and message queue and data stream mechanics.


What Is EventBridge Pipes?

Since EventBridge Pipes is so new, the prudent place to start might be the documentation’s description of the feature:

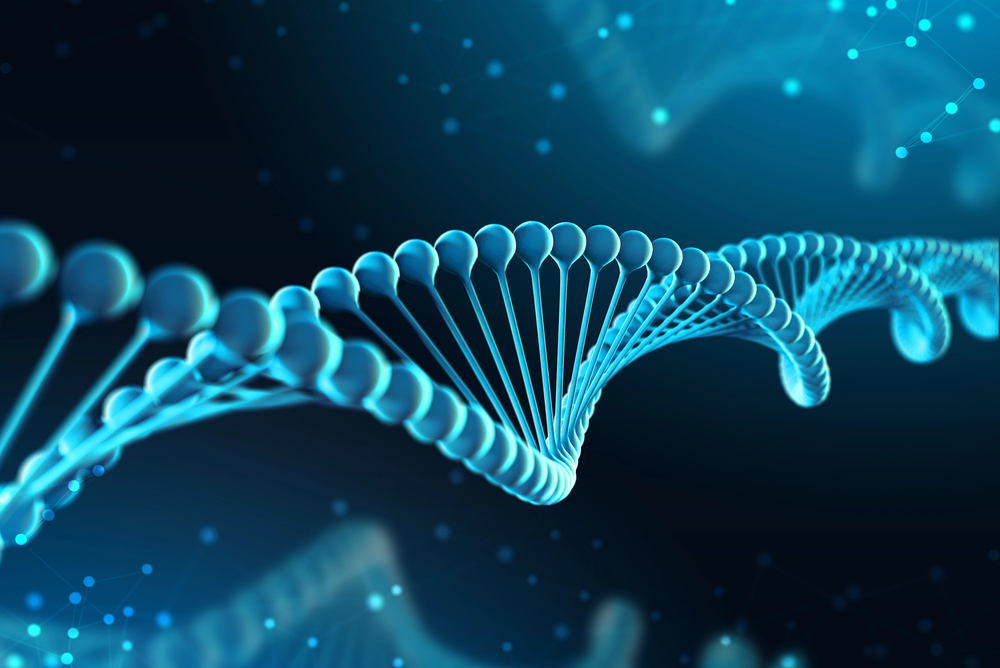

Amazon EventBridge Pipes connects sources to targets. It reduces the need for specialized knowledge and integration code when developing event-driven architectures, fostering consistency across your company’s applications. To set up a pipe, you choose the source, add optional filtering, define optional enrichment, and choose the target for the event data.

The documentation also includes the following graphic illustrating the functionality enabled by an EventBridge pipe:

This description of EventBridge Pipes paints the picture of an all-purpose adapter as a service. It would seem that, like the value proposition of EventBridge buses and rules, Pipes help decouple producer and consumer systems.

This seems a little familiar…

Before proceeding, I want to contrast this feature description with one from the EventBridge features page describing event buses:

EventBridge makes it easier for you to build event-driven application architectures. Applications or microservices can publish events to the event bus without awareness of subscribers. Applications or microservices can subscribe to events without awareness of the publisher. This decoupling helps teams work independently, leading to faster development and improved agility.

In other words, the value propositions for traditional EventBridge buses and new EventBridge Pipes are similar.

Both constructs decouple producer systems from consumer systems. Both enable event filtering and payload transformation.

There are some differences…

Notwithstanding the similarities in the value propositions of EventBridge buses and pipes, there are some significant differences that help us decide which EventBridge primitive to use.

Supported Services

The list of supported sources for EventBridge Pipes tells us a lot about the use cases EventBridge Pipes aims to enable in the AWS communication ecosystem. The six supported event sources are:

- Amazon DynamoDB stream

- Amazon Kinesis stream

- Amazon MQ broker

- Amazon MSK stream

- Self-managed Apache Kafka stream

- Amazon SQS queue

Integrate more easily with existing services

Prior to the release of EventBridge Pipes, engineers who wished to process events in a stream or queue and leverage EventBridge’s filtering and delivery capabilities needed to write their own stream processor or a queue listener. EventBridge Pipes allows users to leverage EventBridge’s filtering and routing capabilities without writing any additional integration code.
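To make that "integration code" concrete, here is a rough sketch of the kind of queue listener engineers wrote before Pipes: a Lambda handler that reads an SQS batch, filters it by hand, and republishes the survivors to a bus with PutEvents. The source name, detail type, and bus name are hypothetical, and the EventBridge client is injected so the sketch runs without AWS credentials.

```python
import json

PUT_EVENTS_MAX_ENTRIES = 10  # PutEvents accepts at most 10 entries per request

# Hypothetical names used throughout this sketch.
SOURCE_NAME = "orders.service"
DETAIL_TYPE = "OrderCreated"
BUS_NAME = "orders-bus"


def to_put_events_batches(sqs_event: dict, bus_name: str) -> list:
    """Filter SQS records by hand and chunk them into PutEvents-sized batches."""
    entries = []
    for record in sqs_event.get("Records", []):
        body = json.loads(record["body"])
        if body.get("type") != DETAIL_TYPE:  # hand-rolled filtering
            continue
        entries.append({
            "Source": SOURCE_NAME,
            "DetailType": DETAIL_TYPE,
            "Detail": json.dumps(body),
            "EventBusName": bus_name,
        })
    return [entries[i:i + PUT_EVENTS_MAX_ENTRIES]
            for i in range(0, len(entries), PUT_EVENTS_MAX_ENTRIES)]


def handler(event, context, events_client=None):
    """Lambda entry point for an SQS-triggered queue listener.

    In a deployed function, events_client would typically be boto3.client("events");
    it is optional here so the sketch runs without AWS credentials.
    """
    batches = to_put_events_batches(event, BUS_NAME)
    if events_client is not None:
        for entries in batches:
            events_client.put_events(Entries=entries)
    return {"batches": len(batches)}


# Usage: 12 matching messages and 1 non-matching message.
sample = {"Records": [{"body": json.dumps({"type": "OrderCreated", "id": i})} for i in range(12)]
                     + [{"body": json.dumps({"type": "OrderShipped", "id": 99})}]}
result = handler(sample, None)
```

A production version would also check the `FailedEntryCount` field of each PutEvents response and retry failed entries, which is exactly the sort of undifferentiated plumbing a pipe absorbs.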

EventBridge Pipe sources are all pull-based

AWS architects might notice two event sources conspicuously absent from the above list: Amazon SNS topics and EventBridge buses. Although EventBridge Pipes can deliver events to SNS topics and EventBridge buses, neither is a supported event source.

The list of supported event sources highlights important new functionality in EventBridge, but because the list is exhaustive, it also exposes some of the limitations of pipes: a source that is not on the list, such as an SNS topic or an event bus, cannot feed a pipe directly.

Those intimately familiar with the AWS Lambda programming model might notice that the six supported event sources for EventBridge Pipes are identical to the services you can process with a Lambda Event Source Mapping.

The similarity between Lambda Event Source Mapping sources and EventBridge Pipes sources might be coincidental. More likely, it results from EventBridge Pipes sources leveraging Lambda Event Source Mappings under the hood. At the very least, I suspect that the EventBridge Pipes team drew some architectural inspiration from the Lambda Event Source Mapping construct.

While EventBridge buses and pipes both decouple producer and consumer systems, pipes do so with a different invocation mechanism from buses. Producers must publish events to an EventBridge bus, whereas pipes fetch events from existing queues and streams.
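The pull model can be sketched with the same SQS `ReceiveMessage` and `DeleteMessageBatch` calls any queue consumer uses. This is my own illustration of a generic poller, not a description of how Pipes is implemented internally; `poll_once` is a hypothetical helper, and `FakeSQS` is a hypothetical in-memory stand-in for `boto3.client("sqs")` that ignores visibility timeouts.

```python
def poll_once(sqs_client, queue_url: str, deliver) -> int:
    """Pull one batch from a queue, deliver it, then delete what was delivered."""
    resp = sqs_client.receive_message(
        QueueUrl=queue_url,
        MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
        WaitTimeSeconds=20,      # long polling: wait up to 20 s for messages
    )
    messages = resp.get("Messages", [])
    if not messages:
        return 0
    deliver([m["Body"] for m in messages])
    # Delete only after delivery succeeds; if deliver() raises, the messages
    # become visible again after the visibility timeout and are retried.
    sqs_client.delete_message_batch(
        QueueUrl=queue_url,
        Entries=[{"Id": str(i), "ReceiptHandle": m["ReceiptHandle"]}
                 for i, m in enumerate(messages)],
    )
    return len(messages)


class FakeSQS:
    """Hypothetical in-memory stand-in for boto3.client("sqs")."""

    def __init__(self, bodies):
        self.messages = [{"Body": b, "ReceiptHandle": f"rh-{i}"} for i, b in enumerate(bodies)]

    def receive_message(self, QueueUrl, MaxNumberOfMessages, WaitTimeSeconds):
        batch = self.messages[:MaxNumberOfMessages]
        return {"Messages": batch} if batch else {}

    def delete_message_batch(self, QueueUrl, Entries):
        handles = {e["ReceiptHandle"] for e in Entries}
        self.messages = [m for m in self.messages if m["ReceiptHandle"] not in handles]


# Usage: 13 queued messages drain in two pulls; the third pull finds nothing.
queue = FakeSQS([f"msg-{i}" for i in range(13)])
delivered = []
pulls = [poll_once(queue, "hypothetical-queue-url", delivered.extend) for _ in range(3)]
```

The contrast with a bus is that nothing here requires the producer to know the consumer exists: the producer only writes to the queue, and the poller decides when and how much to fetch.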

Why This Matters

Ordered Event Delivery

Potentially the largest weakness of EventBridge buses historically has been the inability to invoke consumers in the same order that a producer emitted events. When an EventBridge pipe processes a stream or FIFO queue, it does guarantee the ordered delivery of events. This makes EventBridge Pipes feasible for an array of use cases that buses are unable to handle. The classic example of such a use case might be processing financial transactions.

Because pull-based event sources like FIFO queues and streams have been designed with ordering guarantees in mind, Pipes, like Lambda Event Source Mappings, can listen to these sources and process messages in order. EventBridge Pipe execution throughput is therefore limited by the configuration of the pull-based event source, as described in the AWS documentation:

Pipes with strictly ordered sources (such as Amazon SQS FIFO queues, Kinesis and DynamoDB Streams, or Apache Kafka topics) are further limited in concurrency by the configuration of the source, such as the number of message group IDs for FIFO queues or the number of shards for Kinesis queues. Because ordering is strictly guaranteed within these constraints, a pipe with an ordered source can’t exceed those concurrency limits.
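A small sketch of why message groups cap concurrency: messages in the same group are handled strictly one after another, while different groups may run in parallel, so the number of groups is the ceiling on useful workers. This models FIFO semantics in general rather than Pipes internals, and the group IDs and handler are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def process_fifo(messages, handle) -> int:
    """Process messages in order within each group, with groups running in parallel.

    Returns the number of groups, which is the most workers that can do useful work.
    """
    groups = {}
    for m in messages:  # dicts keep insertion order, so per-group order is preserved
        groups.setdefault(m["MessageGroupId"], []).append(m)

    def run_group(group_messages):
        for m in group_messages:  # strictly sequential within one group
            handle(m)

    with ThreadPoolExecutor(max_workers=max(1, len(groups))) as pool:
        for future in [pool.submit(run_group, ms) for ms in groups.values()]:
            future.result()  # re-raise any handler error
    return len(groups)


# Usage: two hypothetical account IDs used as message group IDs.
messages = [{"MessageGroupId": g, "seq": i} for i in range(6) for g in ("acct-A", "acct-B")]
seen = {"acct-A": [], "acct-B": []}
group_count = process_fifo(messages, lambda m: seen[m["MessageGroupId"]].append(m["seq"]))
```

Adding a third worker here would not help: with only two groups, one worker would sit idle because it cannot take a message without breaking a group's ordering.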

Batched Event Delivery

Another weakness of EventBridge buses is that rules process a single event at a time. Enriching a few thousand DynamoDB write events in a single process is likely going to be far cheaper and more performant than spawning a few thousand different processes to process a single event each.

EventBridge Pipes takes advantage of its pull-based invocation model to enable configurable batch sizes such that engineers can take advantage of the economies of scale provided by the batch processing features of stream and queue event sources.
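As a sketch of what that configuration looks like, the request below is shaped for the `create_pipe` operation of the boto3 `pipes` client, with an SQS source, a batch size, a batching window, and a filter on the message body. All ARNs and names are hypothetical placeholders, and parameter limits should be checked against the current EventBridge Pipes API reference before use.

```python
import json


def build_pipe_request(name, role_arn, queue_arn, enrichment_arn, target_arn,
                       batch_size=10, window_seconds=5):
    """Build keyword arguments for the EventBridge Pipes CreatePipe operation."""
    return {
        "Name": name,
        "RoleArn": role_arn,
        "Source": queue_arn,
        "SourceParameters": {
            "SqsQueueParameters": {
                "BatchSize": batch_size,                           # records per batch
                "MaximumBatchingWindowInSeconds": window_seconds,  # wait to fill a batch
            },
            "FilterCriteria": {
                # Keep only messages whose JSON body has type == "OrderCreated"
                "Filters": [{"Pattern": json.dumps({"body": {"type": ["OrderCreated"]}})}]
            },
        },
        "Enrichment": enrichment_arn,
        "Target": target_arn,
    }


def create_pipe(request):
    import boto3  # imported lazily so building the request needs no AWS SDK
    return boto3.client("pipes").create_pipe(**request)


# Usage with hypothetical placeholder ARNs (create_pipe is not called here).
request = build_pipe_request(
    name="orders-pipe",
    role_arn="arn:aws:iam::123456789012:role/orders-pipe-role",
    queue_arn="arn:aws:sqs:us-east-1:123456789012:orders",
    enrichment_arn="arn:aws:lambda:us-east-1:123456789012:function:enrich-orders",
    target_arn="arn:aws:states:us-east-1:123456789012:stateMachine:process-orders",
    batch_size=100,
)
```

The batching window trades a little latency for fuller batches: the pipe waits up to the window for more records before handing off a batch that is smaller than `BatchSize`.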

These economies of scale are reflected in EventBridge Pipes pricing [Source]:

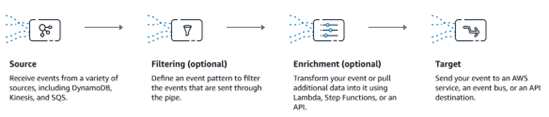

Note that batching lets multiple events join together to form a single request for billing purposes. This is in contrast with EventBridge API integration and event bus publishing pricing, where the following notice is present:

EventBridge Pricing Disclaimer for EventBridge Buses

Note that although large events count as multiple requests in EventBridge buses, smaller batched events do not join together to form a single request from a pricing standpoint.
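To put the difference in numbers, here is a back-of-the-envelope sketch. It assumes, per the AWS pricing pages at the time of writing, that each 64 KB chunk of payload is billed as one request (pipes) or one event (buses), and, following the pricing notes above, it treats a pipe batch as a single payload while bus events are counted individually. The function names are hypothetical; verify the chunk size and rules against current AWS pricing before relying on this.

```python
import math

CHUNK_BYTES = 64 * 1024  # assumed billing chunk size; check current AWS pricing


def pipe_requests_for_batch(event_sizes):
    """Pipes: the batch is sized as one payload and billed per 64 KB chunk."""
    return max(1, math.ceil(sum(event_sizes) / CHUNK_BYTES))


def bus_events_for_batch(event_sizes):
    """Buses: every event is billed on its own, and large events count as several."""
    return sum(max(1, math.ceil(size / CHUNK_BYTES)) for size in event_sizes)


# Usage: 100 events of 1 KB each.
sizes = [1024] * 100
print(pipe_requests_for_batch(sizes))  # → 2
print(bus_events_for_batch(sizes))     # → 100
```

The gap grows with smaller events: many tiny events fit in one 64 KB chunk when batched, but each still counts separately when published to a bus.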

EventBridge Pipes Support Built-in Enrichment Prior to Delivery

The last notable difference between pipes and traditional EventBridge event rules is that pipes support enrichment, for example with Lambda functions, prior to event delivery. This is a fantastic feature, and I’m honestly not sure why AWS has not added the functionality to EventBridge rules yet.

Some existing patterns use Lambda functions to enrich EventBridge events. These patterns are clunky, however, and often add complexity and cost to the enrichment process. I would like to see AWS take inspiration from EventBridge Pipes and bring this functionality to EventBridge event rules.
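For a sense of how small an enrichment step can be, here is a sketch of a Python Lambda enrichment handler. It assumes an SQS source, where each record carries its payload as a JSON string in `body`, and that the pipe hands the enrichment function the whole batch as a list and forwards whatever list it returns to the target. The `CUSTOMER_TIERS` table is a hypothetical stand-in for a database or API lookup.

```python
import json

# Hypothetical lookup table standing in for a database or API call.
CUSTOMER_TIERS = {"c-1": "gold", "c-2": "silver"}


def handler(events, context):
    """Pipe enrichment step: receives the filtered batch, returns the enriched batch."""
    enriched = []
    for record in events:
        body = json.loads(record["body"])  # SQS payloads arrive as JSON strings
        body["customerTier"] = CUSTOMER_TIERS.get(body.get("customerId"), "unknown")
        enriched.append(body)
    return enriched  # this list is what the pipe sends on to the target


# Usage with two hypothetical SQS records.
batch = [{"body": json.dumps({"customerId": "c-1"})},
         {"body": json.dumps({"customerId": "c-9"})}]
result = handler(batch, None)
```

Because the whole batch arrives in one invocation, a real implementation could replace the per-record lookup with a single bulk query, which is where the batching economics discussed earlier pay off.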

Conclusion

EventBridge Pipes are not streams or queues. They do, however, pull events from streams and queues, filter these events, enrich these events, and deliver these events to target services.

EventBridge Pipes are not workflow engines, but each pipe performs a simple four-step workflow: fetching events, optionally filtering them, optionally enriching them, and delivering them to a target service. The enrichment step can be performed by a Step Functions state machine, and Step Functions is a workflow engine.

EventBridge Pipes are not API gateways, event buses, or message topics. Those services use push-based invocation, while EventBridge Pipes use a pull-based invocation model.
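The four steps can be modeled in a few lines of plain Python. This is a local simulation for intuition only; `run_pipe` and its parameters are hypothetical names, not part of any AWS SDK.

```python
from typing import Callable, Iterable, Optional

# Hypothetical aliases: events are plain dicts in this simulation.
Event = dict
Filter = Callable[[Event], bool]
Enricher = Callable[[list], list]
Target = Callable[[list], None]


def run_pipe(
    source: Iterable[Event],
    target: Target,
    keep: Optional[Filter] = None,
    enrich: Optional[Enricher] = None,
    batch_size: int = 10,
) -> int:
    """Fetch, optionally filter, optionally enrich, and deliver events in batches.

    Returns the number of events delivered to the target.
    """
    delivered = 0
    batch: list = []

    def flush() -> None:
        nonlocal delivered, batch
        if not batch:
            return
        out = enrich(batch) if enrich else batch  # step 3: optional enrichment
        target(out)                               # step 4: delivery to the target
        delivered += len(out)
        batch = []

    for event in source:                          # step 1: fetch from the source
        if keep is None or keep(event):           # step 2: optional filtering
            batch.append(event)
            if len(batch) == batch_size:
                flush()
    flush()                                       # deliver any partial final batch
    return delivered


# Usage: keep even ids, tag each event, deliver in batches of 5.
received = []
count = run_pipe(
    source=[{"type": "order", "id": i} for i in range(25)],
    target=received.append,
    keep=lambda e: e["id"] % 2 == 0,
    enrich=lambda b: [{**e, "enriched": True} for e in b],
    batch_size=5,
)
```

Filtering before batching means the enrichment step and the target only ever see events that survived the filter, which is the same ordering of steps the pipe itself uses.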

EventBridge Pipes should be used to process, filter, enrich, and deliver events that require batched processing or preserved ordering. They can also be used to ingest events from non-event bus sources into any of a set of supported AWS targets, including:

- API destination

- API Gateway

- Batch job queue

- CloudWatch log group

- ECS task

- Event bus in the same account and Region

- Firehose delivery stream

- Inspector assessment template

- Kinesis stream

- Lambda function (SYNC or ASYNC)

- Redshift cluster data API queries

- SageMaker Pipeline

- SNS topic

- SQS queue

- Step Functions state machine

  - Express workflows (ASYNC)

  - Standard workflows (SYNC or ASYNC)

Although Lambda functions can be invoked directly by the same sources using Event Source Mappings, EventBridge Pipes lets you filter and enrich these events before the target Lambda function is invoked. Filtering events in the pipe can save Lambda runtime costs and significantly simplify the destination function code.
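To illustrate what pipe-side filtering decides, here is a deliberately simplified matcher for EventBridge-style event patterns. It supports only exact matching against lists of allowed values and nested objects (an array field matches if any of its elements is allowed); the real pattern language also offers prefix, numeric, `anything-but`, and other operators, which this sketch ignores. It also assumes JSON bodies have already been parsed into dictionaries, and `matches` is a hypothetical helper name.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Simplified EventBridge-style matching: exact values and nested objects only."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the corresponding event object
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        else:
            # Leaf: `expected` is a list of allowed values; an array field
            # matches if any of its elements is allowed
            candidates = actual if isinstance(actual, list) else [actual]
            if not any(c in expected for c in candidates):
                return False
    return True


# Usage: only events that pass the pattern would reach the target function.
pattern = {"body": {"type": ["OrderCreated"], "region": ["us-east-1", "eu-west-1"]}}
events = [
    {"body": {"type": "OrderCreated", "region": "us-east-1"}},
    {"body": {"type": "OrderCreated", "region": "ap-south-1"}},
    {"body": {"type": "OrderShipped", "region": "us-east-1"}},
    {"body": {"type": ["OrderCreated", "Other"], "region": "eu-west-1"}},
]
kept = [e for e in events if matches(pattern, e)]
```

In this example only two of the four events would invoke the target function; the other two are dropped before any Lambda time is billed.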

With the new EventBridge Pipes capabilities in our tool belts, we can significantly simplify integrations, further reduce the operational footprint of serverless applications, and save time and money along the way. I don’t know about you, but I’m excited.

Yehuda Cohen is the CTO at Foresight Technologies, a professional services company focused on digital transformation and cloud infrastructure. Yehuda is obsessed with fostering nimble and effective engineering organizations capable of executing simple solutions to challenging problems. With a software engineering background, Yehuda remains fascinated with distributed software and systems, hybrid-cloud infrastructure, DevOps, and machine learning. Yehuda holds both the AWS Solutions Architect Professional and the AWS DevOps Engineer Professional certifications and is an AWS Community Builder focused on Cloud Operations. He also writes a cloud-focused blog at Fun With the Cloud.